Preamble

This post presents a collection of thoughts covering some of the idea space I've developed over nearly ten years of using Tableau,

largely framed within the context of its existence as a data analytical tool suitable for use in the modern enterprise.

There's no real intentional organization; although there are commonalities, themes, and overlaps, no narrative is intended.

Some of the nuggets and seeds have been fleshed out to some degree in working notes, but not to the point where I'm comfortable publishing them.

Part of the purpose of listing them here and now is to experiment: to get them out and see whether doing so helps stimulate a broader synthesis.

One of my motivations is that I've become increasingly concerned with the direction the field I've made my profession is taking.

At heart I believe that data analysis should be part of everyone's intellectual toolbox, that being able to explore the data relevant to one's area of interest provides the opportunity to achieve a deeper and richer understanding of the state of things.

We use tools to augment our intellect—our knowledge and expectations of our environments.

Data analytical tools are, or should be, like other tools: providing functional features that maximize their usefulness while minimizing the concessions the person using them must make.

What's the Point?

A Brief History of Computer-Assisted Data Analysis

– FORTRAN, COBOL, 4GLs (e.g. FOCUS, RAMIS, Nomad), {the dark ages}, ... Tableau, ...?

– terminals, line printers, GUIs, touch-sensitive surfaces, ?

Data Analysis is Not Just Visualization

or,

Visualization Is (Only) The Thin Outer Layer of Analysis

"Visualization" is the currently popular term, used far and wide as the tag for the new wave of tools, technologies, and activities that provide the means to access and present data in a form that people can interpret and derive information from.

But it's misleading, a gross simplification that ignores the primary role context plays in forming and communicating data's information value.

"123.45" is a data visualization

just as much as is this bar,

and "123.45" is more effective in communicating the quantity to boot

decimal notation using the Hindu-Arabic numerals

is in this context a time-proven, highly effective method of visually representing numeric quantities to an arbitrary level of precision in a small space

thinking that quantitative data visualization is restricted to geometric forms is a handicap

Decision-Making Benefits From Analysis of Available Data

Data Analysis is (or should be) a Cognitive and Intellectual Skill

supported as much as possible with tools and technologies that augment human abilities rather than inhibit them or erect unnecessary barriers to them

unfortunately, there are forces that continually work to shape data analysis as a primarily technical undertaking—these forces are to be resisted,

but must be understood in order to be overcome

For Whom the Tool Toils

data analysis is for everyone, not just the executives at the top, or the line-of-business people on the surface

The Right Tool for the Job

Why Only Tables?

Tableau can only access and analyze data in tabular form.

(with the limited exception of cubes, which are infrequent targets for Tableau analysis, perhaps because Tableau's analytical capabilities are stunted here)

To the best of my knowledge the decision to restrict analysis to tabular data has never been explained.

But, it's a severe limitation, and one that is difficult to understand.

Granted, at the time Tableau came into existence, and Polaris before it, organizing data in tables had become the de facto norm.

There are a lot of historical reasons for this; a discussion of them is beyond the scope of this post.

But the truth is that table-based data organizing, even when Relational (and by far most real world systems aren't proper Relational models),

is a terrible way to store data for human understanding (and I've had some people take real umbrage at this statement).

Historically, before tables became the norm, data was stored in structures that matched the information model the data was persisting information about.

Hierarchical databases were everywhere, and network databases weren't so rare as to be alien.

Even more relevant to this discussion, the 4GL data-analytical tools from the 1970s could understand these structures and analyze them correctly in context, providing the human-correct results.

In the present, the growth in the number of non-tabular data sources that people are interested in exceeds that of standard tabular sources.

NoSQL, JSON, XML, YAML, and many other formats and structures have emerged and taken root.

Tableau's inability to recognize and analyze non-tabular data leaves a huge hole in the data analysis tool marketplace. Who's going to fill it?
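
To make the point a little more concrete, here is a minimal sketch in Python of what can go wrong when hierarchical data is forced into a table before analysis. Everything in it is an assumption made for illustration: the data is invented, and the function names (`flatten`, `shipping_from_table`, `shipping_from_hierarchy`) are hypothetical. It does not show how Tableau, or any 4GL, works internally; it only shows why the level at which a value lives matters.

```python
# Invented example data: one customer, with orders nested under it,
# and line items nested under each order.
customer = {
    "name": "Acme",
    "orders": [
        {"id": 1, "shipping": 10,
         "items": [{"sku": "A", "amount": 50}, {"sku": "B", "amount": 25}]},
        {"id": 2, "shipping": 5,
         "items": [{"sku": "C", "amount": 40}]},
    ],
}


def flatten(customer):
    """Flatten the hierarchy into one row per line item, as a table requires."""
    rows = []
    for order in customer["orders"]:
        for item in order["items"]:
            rows.append({
                "customer": customer["name"],
                "order_id": order["id"],
                "shipping": order["shipping"],  # order-level value repeated on every item row
                "sku": item["sku"],
                "amount": item["amount"],
            })
    return rows


def shipping_from_table(rows):
    """Naive column sum over the flat table: counts each order's shipping once per item."""
    return sum(row["shipping"] for row in rows)


def shipping_from_hierarchy(customer):
    """Sum shipping at the level where it actually lives: once per order."""
    return sum(order["shipping"] for order in customer["orders"])


rows = flatten(customer)
naive_total = shipping_from_table(rows)            # 25: order 1's shipping is counted twice
correct_total = shipping_from_hierarchy(customer)  # 15
print(naive_total, correct_total)
```

The flat table is not wrong as storage, but the person analyzing it has to know which columns were repeated by the flattening and compensate for it. A tool that understands the structure could, in principle, carry that burden instead.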

No One Ever Got Fired For Quoting Gartner

Back in the way back, when IBM dominated the business automation universe and mainframes ruled the roost, the conventional wisdom held that "No one ever got fired for buying IBM".

There's a similar knee-jerk reflex conditioned into today's executive managers responsible for selecting strategic information

technology for their organizations: they rely upon Gartner, particularly its Magic Quadrant, and similar research firms

to tell them which companies are positioned where in the marketplace.

Many, too many, managers take these fora as trustworthy guides for their purchasing decisions, essentially abdicating some level of responsibility for conducting their own

research into the technological space wherein may lie tools, products, etc that could be useful and valuable.

(the validity and shortcomings of these market analyses are well documented and argued elsewhere)

Getting recognition in these fora is a huge leap up the food chain for vendors.

Being identified as a viable product in the Magic Quadrant is a threshold event that exposes the vendor and product to the widest, deepest-pocketed audience/market in existence.

Being recommended is a quantum leap forward.

Which is all fine and good if one's trying to build one's company into the largest, most lucrative entity possible.

But.

Is that what a truly innovative company, interested in providing the best possible product that helps the greatest number of people, should be striving for:

to simply grow, and grow, and grow?

Just analyze it.

Many organizations that are trying to adopt Tableau as a BI tool or technology are going about it the wrong way.

They're using Tableau in the traditional SDLC model, and are as a result missing the greatest part of the value Tableau offers.

Software isn't material, so there's little cost to trying something to see if it works.

Experiments can occur almost at the speed of thought, dramatically closing the gap between conception and creation.

The traditional approach of concept, analysis, design, build, deliver is mired in a model of work

that's rooted in the industrial production paradigm that underlies modern business management theory and practice (I have a BBA and MBA).

This model worked extremely well during the industrial age, when producing large numbers of physical goods was the organization's work.

It's not only of little value in the non-physical world, it is actively detrimental to the good conduct of work that's predominately centered around fluid cognition and creation.

Tableau's Arc

Tableau has occupied a shifting position in the data analysis pantheon over the past decade. This is the story of my experience with it,

the ways in which it's been employed, and its presence in the broader worlds in which it's taken root.

Thinking About What Makes Good Data Analytical Tools

looking to Bret Victor's Learnable Programming

...two thoughts about learning:

- Programming is a way of thinking, not a rote skill. Learning about "for" loops is not learning to program, any more than learning about pencils is learning to draw.

- People understand what they can see. If a programmer cannot see what a program is doing, she can't understand it.

Thus, the goals of a programming system should be:

- to support and encourage powerful ways of thinking

- to enable programmers to see and understand the execution of their programs

There's a lot to learn from these thoughts, directly relatable to using technology for data analysis. For example:

Data analysis is a way of thinking about the nature of things: their identities, quantities, measurements/metrics, and relationships to other things.

Learning about the technical properties of specific technical implementations of analytical operations is not learning about data analysis.

If people cannot see into the machine, it's very difficult to achieve a robust understanding of what the machine is doing,

how it does it, how to imagine the things it can do, and how to set it up so that it does what one wants.

Design Rot: Tableau's Usability Has Stalled

For years, Apple followed user-centered design principles. Then something went wrong.

In their article

How Apple Is Giving Design A Bad Name

Don Norman and Bruce Tognazzini argue that Apple has abandoned the principles of usability in its product designs

in favor of a beautiful aesthetic experience

that hinders rather than helps users' ability to accomplish the things they want to.

In its own way, Tableau has similarly failed to continue to pursue the same elegant usability at its heart.

When Tableau appeared its design was revolutionary, providing simple, intuitive objects,

and interactions with those objects, that surfaced data and fundamental data-analytical operations,

very closely matching the human view of a particular and useful analytical model.

This was Tableau's genius.

For the first time people could -do- simple, basic data analysis with a tool that made it simple and easy.

Since then, Tableau hasn't followed through with its initial promise.

The simple and easy things are still simple and easy, but pretty much everything else beyond this space is

too complex, complicated, confusing, obscure, and unnecessarily hard to figure out.

The list of Tableau's design faux pas has become too large to catalog.

At one time I had hopes that Tableau would recognize it had accumulated too much bad design and take steps to remedy the situation.

I no longer believe this; Tableau appears to have invested so much into its current way of doing things,

and reaped so many rewards for doing things the way it has, that there's no motivation or incentive for it to change its stripes.

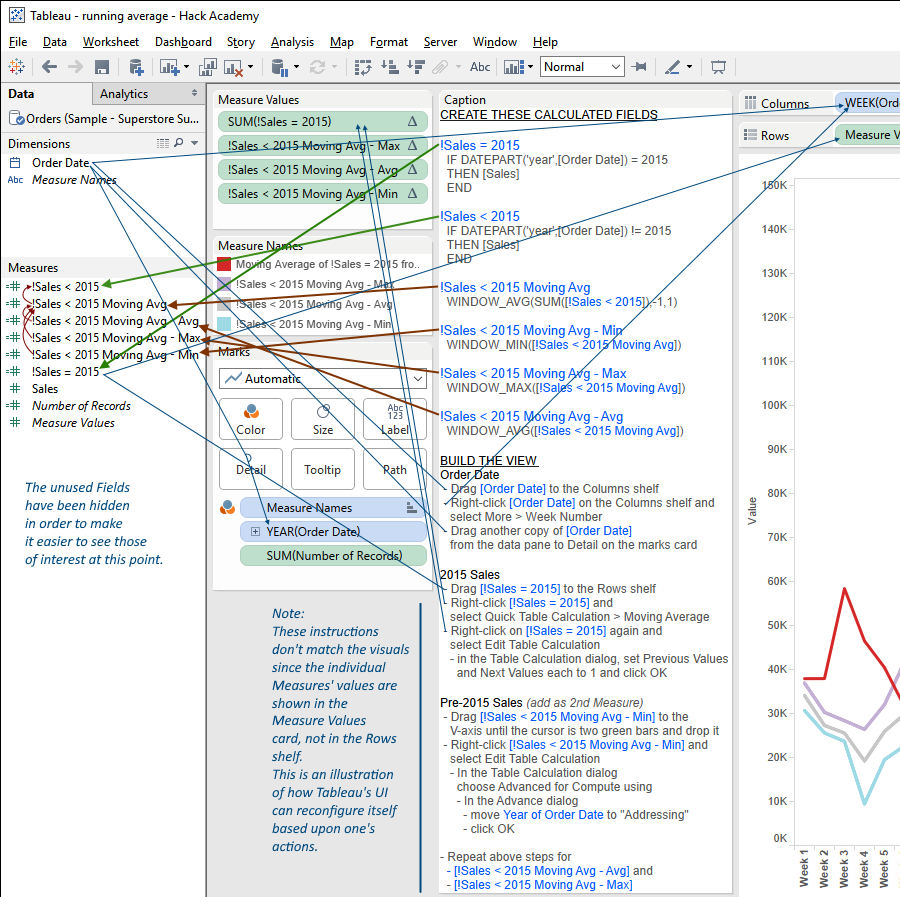

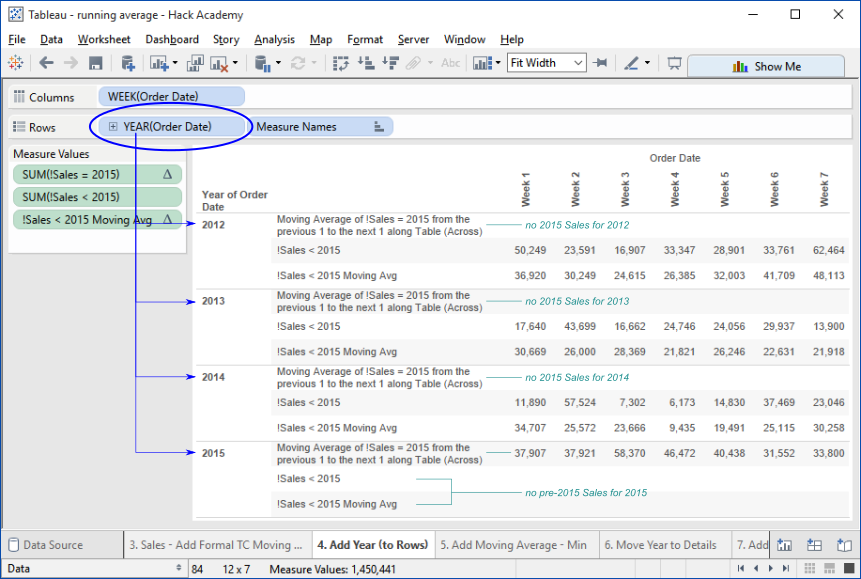

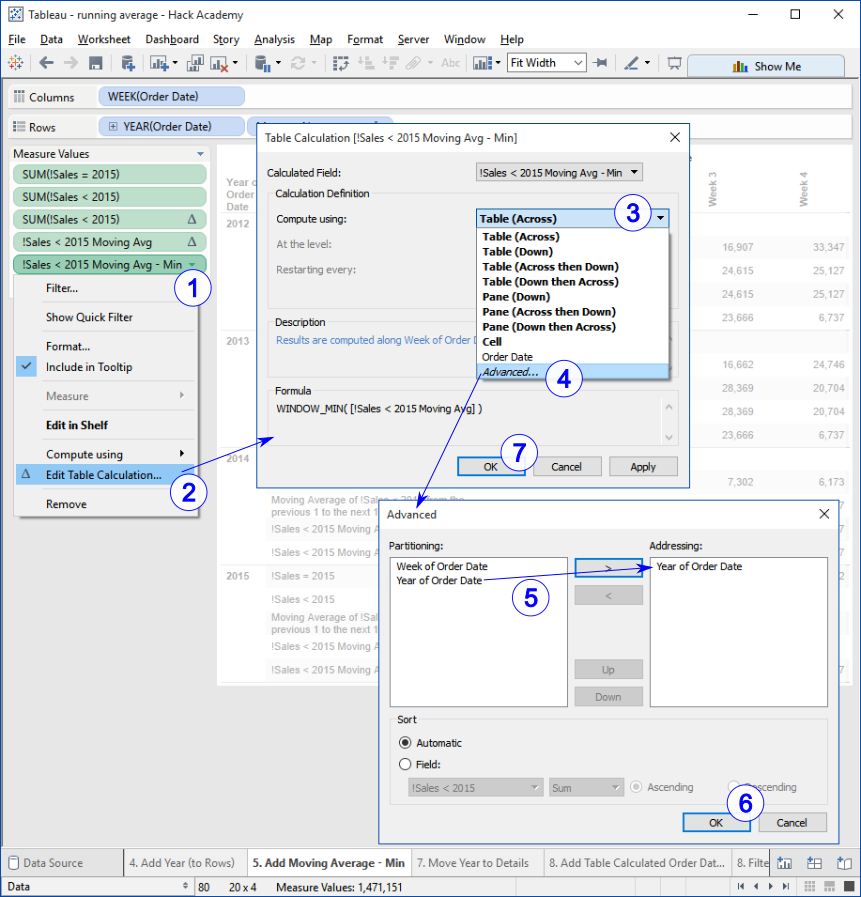

Side Effects, Tips, Tricks, and Techniques: Useful Aids or (Un)Necessary Evils?

Does the need to learn, master, and employ these to accomplish useful analytical effects add to or detract from Tableau's overall utility and value?

It's not a simple black or white situation, but there's an inverse relationship between the quantity and complexity of the technical things one needs to learn to accomplish useful things

and overall utility. The more tricky things one needs to know, the harder the tool is to use from a human perspective, and the further from the primary goal one needs to work.

On the Consequences of the Exaltation of Complexity

what happens when mastery of arcane technical matters is elevated and praised above sense-making

Tableau's Salad Days

The Perils of Ossification

what happens when a tool freezes, welding into place aspects that could be improved through continual evolution

Suffering the Innovator's Dilemma

or,

The Rise and Fall of a Disruptive Innovator

Beware the Cuckoo's Egg

considering the consequences when one tool pushes out others

Yes, You can do that in Tableau. So what?

just because it can be done, should it be?

Tableau is a terrific tool for accomplishing basic data analysis quickly and easily, and for communicating interesting findings, also quickly and easily.

There are, however, limitations in what Tableau does simply and easily, and more limitations in what Tableau can be coerced into doing.

When faced with situations where needs fall outside Tableau's capabilities,

or where the effort to satisfy the needs with Tableau exceeds the effort to satisfy them with another tool or technology,

it's a good idea to at least entertain the notion that Tableau should not be used.

Velocity, Value, Volume

Pervasive Data Analysis - a Promise As Yet Unfulfilled

it's been over thirty years since the idea surfaced of making analysis of one's own data possible, and as seamless and easy as possible

there was a flourishing of the concept for a while; business people could analyze their data with minimal involvement and support from their data processing organizations,

but it didn't last

there was a decline

along with a dramatic increase in the size, wealth, and power of the database and BI platform vendors

a decade ago Tableau appeared, and made it possible for nontechnical people to access and conduct their own fundamental data analysis,

achieving previously unthinkable insights immediately with little or no technical intervention or support—it was a revelation,

and carried the hope that pervasive data analysis could become a reality

so... why haven't things progressed all that much in the ten years since?

Self-Service BI – it ain't what you think

Anecdote:

Several years ago I was talking to a friend, a Senior Information Officer at The World Bank, about Tableau's benefits, how it had the potential to change everything,

describing how it could, if adopted effectively, be the path to helping 'ordinary' people obtain the insights and information from the data that mattered to them,

much of which lay outside the boundaries of the Bank's institutional data hoard.

Her response was that they didn't need it, their needs were being satisfied through the self-service Business Objects environment they'd set up.

As it turned out, the BO solution didn't gain much traction.

Deliver Value Early and Often

Do the Right First Thing First

or,

Start With Data Analysis

it seems obvious, almost not worth mentioning

but far too many BDA efforts ignore, or are ignorant of, the opportunity to start with data analysis,

often in the mistaken and disastrous belief that data analysis is something that happens after precursor activities take place

You Can't Start With Everything

if you try to, you'll never have anything

or,

The Big Bang Delivery Model is a Recipe for Failure

Diminishing IV/E

the effort to achieve information value from data increases more than linearly,

or,

it gets harder and harder to obtain the next level of value from data, along multiple dimensions

Does Deep Tableau Expertise Lead to Diminished Value?

do the demands and difficulties involved in developing the skills necessary to wield Tableau successfully across a broad spectrum of analytical purposes and outcomes

detract from the value that could be delivered with it?

Beware Re-branded Big BI

several years ago it wasn't uncommon for Tableau advocates to contrast Tableau to Big BI

Tableau was seen as the 'anti-BI', the human-oriented tool that would be an antidote to Big BI's ills

then Tableau gained traction, became better-known, then popular; it surfaced into the corporate executive mindspace through reviews including Gartner, Forrester, etc.,

and to some extent from the bottom up as people discovered its benefits and used it to good effect

once this happened things began to change

traditional vendors started to come out with data visualization components and tools as their "New!", "Improved!" offerings, trying to capitalize on the market Tableau had pioneered

the Data Warehousing folks, the very same ones who had, for almost two full decades, been preaching to the faithful of the need to devote themselves to building

enterprise-spanning industrial-strength universal conformed data repositories and associated answer-all-questions analytical platforms,

crashed the party declaring their allegiance with the new agile, adaptable BI world

but they were still selling their same old wares with a fresh coat of paint slapped on

Don't Struggle Alone with Your Data Analysis, Struggle with Tableau

Tableau is Bleeding

Contemplating Complexity's Consequences

some problems are inherently complex, but the means of addressing them should be no more complicated than necessary

Baroque is Broken

ornamentation and elaborate constructions can be superficially attractive but they are often at odds with, even detrimental to, real usefulness

Tableau's Data Blending: is it really a Good Thing?

legend has it that data blending was a hack by one of Tableau's developers; true or not it has the feel of one

hack or not, it's a very useful mechanism, and has been leveraged by very clever people to achieve all sorts of very useful analytical outcomes

but... it has limitations that undermine its usefulness in many real world situations; the question here is to what degree they render it irrelevant for real world purposes

Misalignment of Focus

Analytical Types

– Curiosity vs Confirmation

– Explainers vs Confirmers

– Explorers vs Justifiers

Zombie BI

we thought Big BI was dead, or at least on life support, but it's showing signs of resurrecting

Enterprise Data Analysis is Fractal

self-similar at all scales

All Data is Valid

or,

There's no bad data,

but much of it is misunderstood and/or it tells unpalatable truths.

Scaling Mount Simplicity

keeping things simple isn't easy, but it's worth striving for

Whither Data Analysis Tools?

Rethinking the Data Warehouse

storing, safeguarding, and provisioning an enterprise's data isn't what it used to be

(and the traditional model didn't work all that well anyway)

Your Tableau Are Doomed

Pursuit of Maximizing Market Potential Considered Harmful

or,

Maximizing Market Potential, a Cautionary Tale

The New Hope Fades

Entropy in the Tableau Universe

Clarity, Coherence, Completeness are Virtues

determining whether data analytical efforts are worth pursuing

Development is Best Served in Minimal Portions

determining whether data analytical efforts are worth pursuing

Development is the technical implementation of someone else's ideas

using a traditional SDLC as the default approach to providing actionable information to business decision makers is a very bad idea

(unless you're a software vendor or otherwise benefit from the expenditure of too much time, money, attention, energy, and other resources)

Requiem for a Once Noble Tool

Once upon a time a very clever young man thought up a new and improved way for nontechnical people to analyze their data.

This required a re-conception of "data analysis", moving away from the prevailing paradigm of technologically-centered programmatic creation of artifacts that, hopefully, conveyed useful information.

The young man's innovation took a different approach.

It provided mechanisms that tightly coupled the basic data analysis operations (field selection, data filtering, sorting, and aggregation) with intuitive system-presented data and analytical operation avatars familiar to 'real' (i.e. nontechnical) people.

These mechanisms, when combined within the operational environment by these people, caused the system to generate and present the appropriate analytic.

This was a revolution, and it changed everything.

For the first time people could interact with their data directly, without enlisting the assistance of other people with specialized technical skills, and do their own data analysis.

For the first time there was the very real hope that things would continue to get better, that with the barrier to data analysis now breached the breadth, depth, and reach of human-centered data analysis tools would continue to blossom.

There were limitations, of course, as there always are in the first conception of a solution to a simple subset of a very large, intrinsically complex problem space.

Data analysis is almost unbounded in its full range, from the types of things that can reasonably be thought of as data, to the analyses that can be conducted.

One rough analogy is to consider the initial tool as providing arithmetic functionality, and the full potential space as the full range of mathematical analysis, e.g. number theory, calculus, etc.

Sadly, the goodness failed to fulfill its promise.

It stalled out early.

Instead of continuing to expand the realm of easy, nontechnical, human-centered analysis into the analytical universe, it expanded its functionality by adding technical, deeply mysterious features that left mere 'real' people on the outside looking in.

And in due time it became, first, something some people had some familiarity with, then one that some had heard of, then a ghost of a past that didn't matter to most people.